Blog

AI Resources Blog: Teaching Students (and Ourselves) about AI

Photo credit: Glenn Koelling

Glenn Koelling, Undergraduate Engagement Coordinator, University Libraries

Current generative AI tools are less like working with an assistant and more like working with a wizard performing magic. This observation comes from Professor Ethan Mollick’s September 11th, 2025, newsletter called One Useful Thing. It’s a wonderful newsletter where Mollick shares his observations of and experiences with AI and how it functions in the world. This particular observation of AI wizardry struck a chord with me.

I coordinate UNM Libraries’ Undergraduate Engagement Team at the Albuquerque campus, and I work primarily with undergraduate students, helping them navigate the research process. As a librarian, I’m very interested in how our students interact with information. This past year, I’ve noticed that they (and the population in general) are increasingly talking about using gen AI tools like ChatGPT as information sources. They ask it their questions, treating it as a trusted source.

This unsettles me because gen AI is not the same as our other information sources, where we select what we want to use from a collection, often a search results list. Instead, it provides an answer (unless directed otherwise). Mollick conceptualizes older-generation gen AIs as assistants: they needed a lot of human input, and their information was often wrong. Current gen AIs are more like wizards. They create information and do things with it – and they don’t need us nearly as much.

Mollick puts some of my unease into words: “Sometimes the AI’s work is so sophisticated that you couldn’t check it if you tried. And that suggests another risk we don't talk about enough: every time we hand work to a wizard, we lose a chance to develop our own expertise, to build the very judgment we need to evaluate the wizard's work.” Determining when to trust something – especially on the open web – is a hard skill to build. It takes experience with different types of sources and content to be able to pull out likely results. Chatbots are easier to understand than a search result list, but their answers often lack the context we need to judge an answer’s value.

Mollick continues:

This is the issue with wizards: We're getting something magical, but we're also becoming the audience rather than the magician, or even the magician's assistant. In the co-intelligence model, we guided, corrected, and collaborated. Increasingly, we prompt, wait, and verify… if we can.

Human-mediated experience becomes computer-mediated likelihoods. I worry that students who rely on chatbots to answer questions are losing the chance to develop their experience with a variety of information sources and, consequently, their own bullshit detectors.

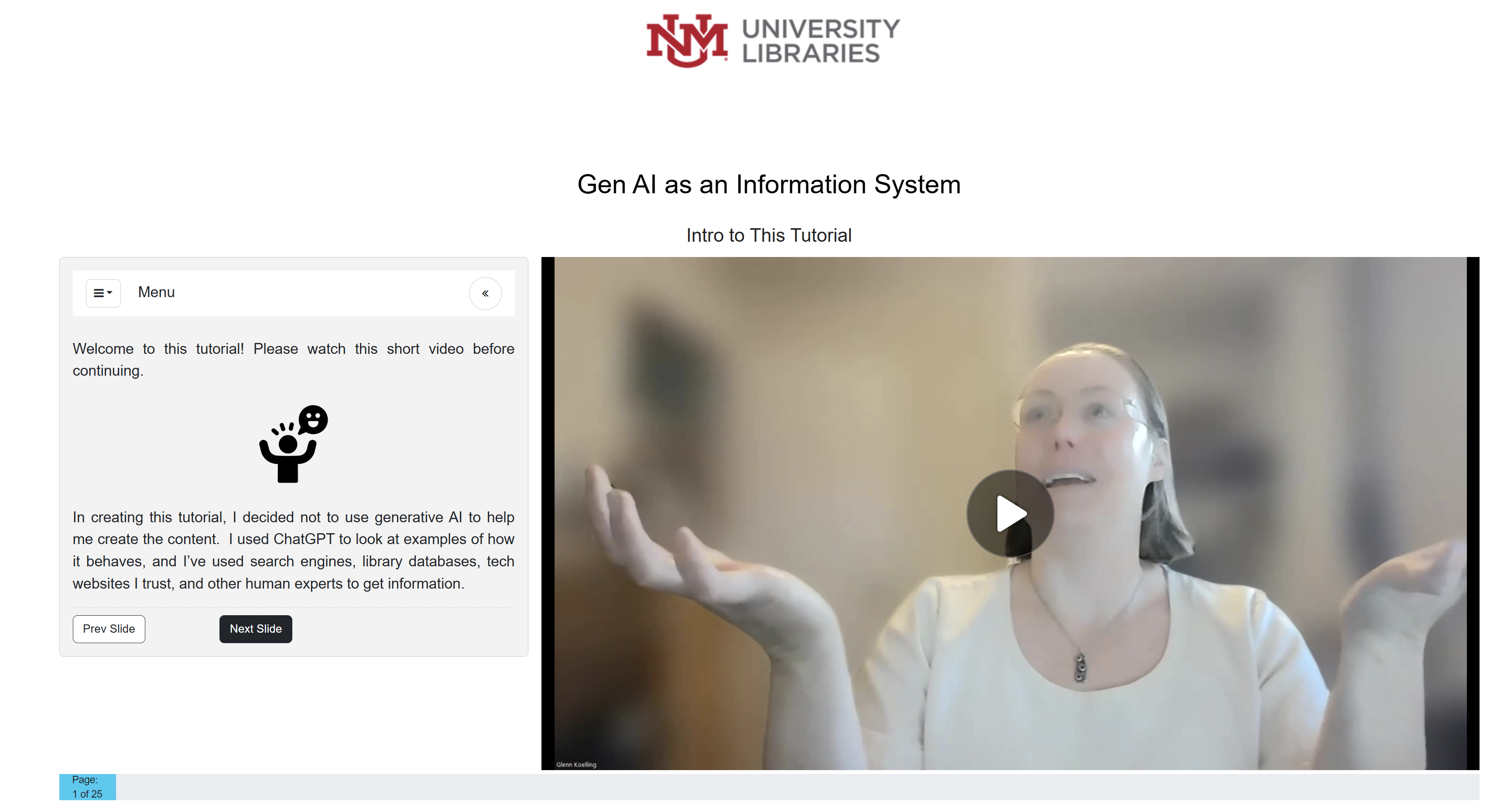

This conundrum has been top of mind for me. We (the Undergraduate Engagement Team at the University Libraries) recently rolled out a suite of tutorials to introduce students to key library skills and information literacy concepts. All these tutorials can be mixed and matched, and each has a certificate of completion that students can turn in. I opted to design a tutorial on Generative AI as an Information System (https://libguides.unm.edu/Gen_AI). This tutorial covers the basics of three information systems: generative AI chatbots, internet search engines, and library databases. It goes over how each system works, the differences between them, what each is good at, and ethical information issues raised by generative AI. By the end of this tutorial, students will be able to define key terms; choose which info system will suit their needs; identify pros and cons of AI, search engines, and library databases as information systems; and explain how gen AI works at a basic level.

My hope is that instructors will assign this tutorial as homework for their students so that they can make informed choices about what information tools they use. And my hope for all of us is that we choose to be magicians rather than assistants.

-----------------------------------------------------------------------------------------------------------------------------------------

Nepenthes: The Maze Code Trapping AI Bots in Their Own Web, Its Environmental & Ethical Implications

Photo Credit: Sonja Kalee

Luc Biscan-White, Project Assistant, University Libraries

As artificial intelligence (AI) crawlers continue to scour the internet to gather data for training large language models (LLMs), website owners face growing challenges in protecting their content from being scraped. A recent preemptive, and deliberately disruptive, solution emerged in the form of Nepenthes, an open source “tar pit” code which causes AI training bots to fall into an infinite maze of randomly generated webpages designed to waste their time and computational resources.

Nepenthes takes its name from a genus of carnivorous pitcher plants (family Nepenthaceae) that trap and consume their prey via a liquid “trap” filled with sticky digestive enzymes, aptly reflecting the program’s defensive protocol. Created by a pseudonymous coder known as Aaron B., Nepenthes generates pages filled with dozens of random links, each pointing back into the “maze” itself. Web crawlers, which typically operate by downloading the URLs they find on each page, become stuck chasing an endless trail of links. These links, however, lead nowhere but to more of the same labyrinth of near-empty webpages.

Aaron B. explains:

“It’s less like flypaper and more an infinite maze holding a minotaur, except the crawler is the minotaur that cannot get out. The typical web crawler doesn’t appear to have a lot of logic. It downloads a URL, and if it sees links to other URLs, it downloads those too. Nepenthes generates random links that always point back to itself—the crawler downloads those new links. Nepenthes happily just returns more and more lists of links pointing back to itself.”
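The loop Aaron B. describes can be sketched in a few lines of Python. This is not the Nepenthes source, only a minimal illustration under stated assumptions: the `/maze/` prefix, the function names `maze_page` and `naive_crawl`, and the number of links per page are all hypothetical. Every generated page links only to other maze pages, so a crawler that follows each link it finds never runs out of URLs and stops only when its own budget is spent.

```python
import hashlib
import random
import re

PREFIX = "/maze/"  # hypothetical URL prefix for the trap


def maze_page(path: str, n_links: int = 5) -> str:
    """Return HTML for `path` whose links all point back into the maze."""
    # Seed from the path so the same URL always yields the same page.
    seed = int(hashlib.sha256(path.encode()).hexdigest(), 16)
    rng = random.Random(seed)
    links = [f"{PREFIX}{rng.getrandbits(48):012x}" for _ in range(n_links)]
    items = "".join(f'<li><a href="{u}">{u}</a></li>' for u in links)
    return f"<html><body><ul>{items}</ul></body></html>"


def naive_crawl(start: str, budget: int) -> int:
    """Follow every link found, as a simple crawler would; return pages fetched."""
    queue, seen, fetched = [start], {start}, 0
    while queue and fetched < budget:
        html = maze_page(queue.pop(0))
        fetched += 1
        for url in re.findall(r'href="([^"]+)"', html):
            if url not in seen:
                seen.add(url)
                queue.append(url)
    return fetched
```

In this sketch the queue of unvisited URLs grows faster than the crawler can drain it, so `naive_crawl` always exhausts whatever budget it is given. A crawler that caps its depth per site or recognizes the pattern would escape, which is the kind of countermeasure the post goes on to mention.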

This defensive code doesn’t just deflect unwanted data scraping; it actively wastes the scraper’s bandwidth and processing power, potentially deterring or even throttling the resources AI companies invest in unrestrained web crawling. Nepenthes can be deployed “defensively” to shield a site’s real content by flooding the crawler with junk URLs or “offensively” as a honeypot that traps and burns AI bots:

“Let’s say you’ve got horsepower and bandwidth to burn, and just want to see these AI models burn. Nepenthes has what you need … let them suck down as much bullshit as they have disk space for and choke on it.”

On the technical side, Nepenthes produces thousands of static pages, each with many outbound links connecting back into the maze, ensuring that no exit path exists. The text that populates these pages is referred to as Markov babble: the output of a Markov chain, a statistical method that produces chains of words mimicking natural language patterns without meaningful coherence. While the trap wastes the crawler’s bandwidth and computational cycles, Nepenthes throttles content generation and uses static files to keep the server’s CPU load manageable. In other words, although the code can create thousands of links, the files that are created and scraped contain minimal actual content. This keeps web crawlers scraping without overloading the host server or resetting the crawler, which could send it out of the maze. While the Nepenthes code is clever in the relative simplicity of its design, web crawlers are advancing rapidly. Some are even capable of detecting and bypassing Nepenthes or similar tools, prompting innovations such as reverse proxy implementations (an intermediary server placed in front of the site) or other techniques to maintain effectiveness.
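For readers curious what Markov babble looks like in practice, here is a minimal word-level sketch in Python. It is an illustration only, not the generator Nepenthes ships with; the names `build_chain` and `babble` and the tiny sample corpus are assumptions made for this example. The idea is to record which words follow which in some source text, then walk those records at random.

```python
import random
from collections import defaultdict


def build_chain(text: str) -> dict:
    """Map each word to the list of words that follow it in `text`."""
    words = text.split()
    chain = defaultdict(list)
    for current, following in zip(words, words[1:]):
        chain[current].append(following)
    return chain


def babble(chain: dict, length: int, seed: int = 0) -> str:
    """Walk the chain, picking each next word at random from observed followers."""
    rng = random.Random(seed)
    word = rng.choice(sorted(chain))
    out = [word]
    for _ in range(length - 1):
        followers = chain.get(word)
        # Dead end (word never had a follower): restart from a random word.
        word = rng.choice(followers) if followers else rng.choice(sorted(chain))
        out.append(word)
    return " ".join(out)


# Hypothetical sample text; a real deployment would use a much larger corpus.
corpus = "the crawler follows the links and the links lead the crawler back into the maze"
print(babble(build_chain(corpus), 12))
```

Each adjacent pair of words in the output appeared somewhere in the source text, which is why the result looks locally plausible while carrying no meaning overall.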

Reports show that data centers need ever more water to cool AI host servers, diverting water from areas facing higher levels of drought susceptibility, and Nepenthes provokes increased activity from those same data centers. What are the environmental trade-offs of confusing and trapping crawlers the way Nepenthes does? Does deploying such resistance tools exacerbate environmental harm, given that AI training and crawling already have substantial carbon footprints?

Crawlers caught in Nepenthes’ infinite loop consume more electricity and compute cycles than if they were simply scraping from website to website and moving on. Most of these servers run on energy-intensive cloud infrastructure, so this indirectly increases carbon emissions. The near-endless downloads from Nepenthes’ near-empty pages inflate network activity, necessitating more backend infrastructure and adding to environmental strain. While Nepenthes raises the barrier to, and the cost of, aggressive unauthorized scraping, it risks giving AI companies an incentive to escalate resource consumption, leading to an escalating race in which each side tries to catch the other.

With these concerns in mind, there is still an argument that this friction may pressure AI companies to adopt more ethical and efficient data sourcing methods, potentially reducing reckless scraping in the long term.

Nepenthes captures a striking intersection of technical ingenuity and cultural resistance in our quickly emerging digital era of AI. By exploiting the predictable mechanics of simplistic web crawlers, it empowers website owners to reclaim some control over their content, even if temporarily.

However, the tool also illustrates the challenging tradeoffs between ethical resistance, environmental impact, and the escalating back-and-forth with ever-evolving AI scraping technologies. It highlights the urgent need for transparent AI data practices, sustainable technology development, and new norms respecting digital content ownership.

In the march toward AI progress, respect for creators’ rights is the burning ethical question. Nepenthes and similar coding tactics are important but partial steps toward generating enough tension to produce real solutions in our current digital moment.

Sources:

Koebler, Jason, 404 Media. January 27, 2025. “Nepenthes: Infinite Maze Traps AI Crawler.” https://www.404media.co/developer-creates-infinite-maze-to-trap-ai-crawlers-in/

Gigazine, January 27, 2025. “Infinite Maze Traps AI Crawler Nepenthes.” https://gigazine.net/gsc_news/en/20250127-infinite-maze-traps-ai-crawler-nepenthes/

Nicoletti, Leonardo, Michelle Ma, and Dina Bass, Bloomberg. May 8, 2025. “AI is Draining Water from Areas That Need it Most.” https://www.bloomberg.com/graphics/2025-ai-impacts-data-centers-water-data/

-----------------------------------------------------------------------------------------------------------------------------------------

Thirsty Algorithms: Why Measuring AI’s Water Use Is Still a Murky Science

The dried riverbed of the Rio Grande along the Bosque in Albuquerque, New Mexico, in August 2025.

Photo Credit: Luc Biscan-White

Luc Biscan-White, Project Assistant, University Libraries

AI’s water usage is enormous and growing. There is still no single, consistent metric by which its true resource impact can be measured or compared across data centers, models, or even individual prompts. This inconsistency obscures accountability and hampers both research and regulation, even as data center expansion strains local water supplies worldwide.

Quantifying AI’s water use involves a complex web of variables, starting with operational consumption (the water directly used for cooling servers during model training and inference), supply chain “embodied” water (for hardware manufacturing), and off-site water used in generating electricity for powering data centers. Each category is affected by local climate, cooling technology, site infrastructure, regional energy mix, and reporting transparency.

Even the most widely used metric, Water Usage Effectiveness (WUE), measured in liters of water per kilowatt-hour of energy, is fraught with problems. Different companies report different averages (e.g., Meta cited 1.8 L/kWh, while Google and Microsoft report different site averages), but there is little public accountability. As one Institute of Electrical and Electronics Engineers (IEEE) speaker put it:

“Many companies use energy consumption as a proxy for water use, but these methods have an error margin of up to 500%.”
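To make the proxy method concrete: because WUE is expressed in liters per kilowatt-hour, an energy-based estimate of on-site water use is simply energy multiplied by WUE. The short Python sketch below uses the 1.8 L/kWh figure cited above; the function name and the energy total are hypothetical, chosen only to show the arithmetic.

```python
def onsite_water_liters(energy_kwh: float, wue_l_per_kwh: float) -> float:
    """Estimate on-site cooling water as energy use times WUE."""
    return energy_kwh * wue_l_per_kwh


# 1.8 L/kWh is the Meta figure cited above; the energy figure is hypothetical.
estimate = onsite_water_liters(energy_kwh=1_000_000, wue_l_per_kwh=1.8)
print(f"{estimate:,.0f} liters")
```

The calculation is only as good as the WUE value fed into it. A single company-wide average says nothing about a particular site, season, or cooling system, which is one reason energy-based estimates can land so far from actual use.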

Large data centers can consume millions of liters per day. But depending on site infrastructure and cooling method, actual usage varies dramatically:

- Hyperscale data centers (Google, Microsoft) report annual withdrawals in the tens of billions of liters (source)

- Smaller centers can use anywhere from 6 to 121 million liters annually, with vast disparities even within the same metropolitan area

- Per-prompt, estimates for popular models like ChatGPT or Gemini range from 0.26 mL (Google’s most recent figure) to 500 mL per 20-50 prompts (source), with usage potentially higher for longer prompts or less efficient deployments
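The per-prompt figures in the last item are easier to compare once they share a unit. The sketch below, in Python, converts "500 mL per 20-50 prompts" into milliliters per prompt and sets it against the 0.26 mL figure. The variable and function names are illustrative, and the comparison assumes both estimates measure the same kind of water use, which may not hold.

```python
GOOGLE_ML_PER_PROMPT = 0.26   # Google's figure, as cited above
BOTTLE_ML = 500               # upper estimate: 500 mL per 20-50 prompts
PROMPTS_LOW, PROMPTS_HIGH = 20, 50


def per_prompt_range(total_ml: float, prompts_low: int, prompts_high: int) -> tuple:
    """Convert 'total_ml per N prompts' into a (min, max) mL-per-prompt range."""
    return total_ml / prompts_high, total_ml / prompts_low


low, high = per_prompt_range(BOTTLE_ML, PROMPTS_LOW, PROMPTS_HIGH)
print(f"{low:.0f}-{high:.0f} mL per prompt")
print(f"{low / GOOGLE_ML_PER_PROMPT:.0f}x to {high / GOOGLE_ML_PER_PROMPT:.0f}x Google's figure")
```

Put on the same footing, the higher estimate works out to 10-25 mL per prompt, roughly 38 to 96 times the lower one. A gap that wide suggests the estimates rest on different definitions and assumptions rather than on measurement noise.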

AI’s indirect water footprint (linked to electricity generation at coal or nuclear plants, or vast water-intensive chip production plants like those in Taiwan) is even harder to quantify, yet it potentially dwarfs the operational consumption.

There is no industry consensus on what should count as “water use” for AI. Should the metric count only water directly lost to evaporation? Or all water withdrawn and returned? Should it include supply chain (manufacturing) water? Is a per-prompt average meaningful when model size, hardware efficiency, local temperature, and cooling systems all vary?

As the authors of Making AI Less Thirsty note:

“Water and carbon footprints are complementary to, not substitutable for, each other. Optimizing for carbon efficiency does not necessarily result in—and may even worsen—water efficiency...”

Similarly, as summarized by Jegham et al. in How Hungry is AI:

“Existing frameworks either lack the ability to benchmark proprietary models, the granularity needed for prompt-level benchmarking, or are constrained to local setups, failing to capture the infrastructure complexity of production-scale inference. This opacity undermines both scientific benchmarking and policy efforts aimed at regulating AI’s true environmental cost.”

Without standardized, transparent measurement and reporting, it’s nearly impossible for researchers, regulators, or the public to compare the water footprints of different AI models, companies, or geographic regions. As a result, water consumption estimates can differ by orders of magnitude. Particularly vulnerable communities, especially those in water-stressed regions, may face unexpected shortages as new data centers open. Tech companies sometimes even tout “water positive by 2030” goals without open, verifiable metrics.

Experts urgently call for holistic, transparent, and standardized reporting across all categories of water use. This sentiment is increasingly echoed in policy papers, in peer-reviewed research, and by advocacy groups. The path toward sustainable AI is blocked not only by resource intensity, but by measurement opacity. And while some companies (Google, for example) are making initial efforts toward transparency, the numbers reported should be evaluated with scrutiny.

“The urgency can also be reflected in part by the recent commitment to Water Positive by 2030 from industry leaders, including Google and Microsoft, and by the inclusion of water footprint as a key metric into the world’s first international standard on sustainable AI to be published by the ISO/IEC.” (Making AI Less ‘Thirsty.’)

There is growing awareness about AI’s rising water footprint. Unless transparency and consistency in measurement take center stage, efforts to rein in AI’s environmental impacts will be undermined by confusion, opacity, and unreliable benchmarks.

Sources:

Making AI Less Thirsty (Li et al., 2025)

How Hungry is AI? Benchmarking Energy, Water, and Carbon Footprint of LLM Inference

AI's excessive water consumption threatens to drown out its environmental contributions (Gupta et al., 2024)

AI's hidden thirst or how much water does Artificial Intelligence really drink - Solveo

Measuring the environmental impact of AI inference - Google Cloud

Data Center Water Usage: A Comprehensive Guide - dgtlinfra

The hidden cost of AI: Unpacking its energy and water footprint - titcc.ieee

The Secret Water Footprint of AI Technology - The Markup

In a first, Google has released data on how much energy an AI prompt uses – MIT Technology Review

-----------------------------------------------------------------------------------------------------------------------------------------

10/17/2025: Dispatch from World Summit AI

Margo Gustina, Assistant Professor, University Libraries

World AI Week was in Amsterdam (NL) last week (October 6-10), with the World Summit AI as its marquee event. For two days, thousands of attendees listened to and engaged in discussions of AI impacts across social dimensions like workforce, education, civic structure, and individual relationships. With regular nods to the very real concerns about the current corporate AI oligopoly, the overall tone for the week was that of an excited pep rally for an AI-saturated future.

A recurring theme among engineering presenters and firm exhibitors was that 2025 is the year of AI Agents. In Google’s free and self-congratulatory publication Forward (given to every attendee), the Public Worldwide Examples piece was really a collection of AI agent use-cases. Most of the exhibitors and many of the technical presenters (in the Tech Deep Dive track) were there with agentic engineering innovations, code-sharing mechanisms, out-of-the-box agent development platforms, and personal data security agents. While a broader complaint about AI is that no one needs generative AI, use-specific AI agents are the innovation that public and private sectors are demanding. Most startling for me, as a new-to-AI librarian, was how “easy” developing effective custom solutions has become.

While software development innovations abounded, the robotics dimension of AI, where hardware and software need to be co-developed and deployed, is moving more slowly. Surprisingly, the constraint in the intelligent autonomous mechanical beings sector is data availability. AI models are mostly some kind of language model trained on text (and text descriptions of images). In robotics, innovations in data types and modeled understanding of video data types are needed. According to Jennifer Ding (ML Solutions Engineer at Encord), if a robot is being designed to wash dishes, a video dataset of dish washing is needed. Encord and other firms help develop those data by hiring human actors to do the activity they wish to train robots to do, filmed from a variety of angles with detail shots of movement physicality.

In the same robotics panel discussion, Limor Schweitzer (founder, MOV.AI) noted that the demand for robotics was a demand for unpaid labor. While there was an arguable use-case for hazardous employment that makes pro-human sense, like oil rig and underwater mechanics work, all panelists agreed that home health care companies were the most eager for their products to be ready for deployment. Multiple sessions were run by pro-efficiency industrialists who talked about AI deployments as helping them move to “dark factories,” places where only automatons operate. Although these presenters took the time to talk about how this meant that their human workforce was given more time to interact with human customers, when the goal is maximum profit, it’s easy to see a future with no human interaction at all. As Sharon Sochil Washington (founder, MASi) noted, as interfaces trained on language models become the primary education and medical interactions for people worldwide, the base data used to train the AI will mean hundreds and hundreds of cultures won’t be represented in that future.

Karen Hao (author, Empire of AI) gave the opening keynote for World Summit AI. She exhorted the audience not to see the global deployment of US-owned AI systems as “democratizing.” She used a borrowed term, techno-authoritarianism, to describe this era of minimally regulated AI spread. By properly naming the current landscape of expansionist exploitation by the big AI tech companies, countries and municipalities stand a better chance of effectively developing and implementing local governance solutions that work for their people. In my opinion, hers was the most optimistic talk of the week.